Shipping Containers

Prior to the 1960s, transporting goods from a farm or factory was a labour-intensive activity, as the goods made their way through a network of trucks, trains and ships. Goods were typically packed differently for a truck than for a train, and differently again for a ship, so they had to be reorganised and repacked as they were repeatedly loaded and unloaded at each stage of a journey.

A transformation began in the 1960s with the advent of shipping containerisation, which allowed goods to be packed into internationally standardised containers. These containers could be efficiently conveyed and transferred through the global transport network of container-enabled trucks, flat-bed trains, ships and ports without the expense of repacking and reloading at each stage. Later, computers enabled automated tracking and routing of containers, further reducing the costs associated with theft and damage. Shipping times decreased and global trade increased as efficiency improved. The core innovation was that all goods could be handled in the same consistent, streamlined way across the transport network. Goods were now easily portable.

Software Containers

The software industry is currently experiencing a similar transformation. By adopting the concept of containers, it is beginning to solve the problem that developers face when running software systems on different platforms, where the environment for developing and testing differs from the production environment, which can itself run on different clouds, virtual machines or physical servers. These servers may have different operating systems, installed libraries, database versions or other dependent packages. Software had a similar problem to shipping: its portability was limited. How it ran was heavily dependent on the host computer environment.

Software containers allow the entire software stack (with all required dependent packages, libraries and configuration files) to be packaged together into a standardised container image. This abstracts away the details of the runtime environment so that the software has a consistent and reproducible view of its world. Container images are run by container run-times, and the industry de facto standard is provided by Docker [1]. Like shipping containers, Docker enables software containers to behave in a consistent manner regardless of the underlying host computing environment, so software is now much more portable. Docker container images are able to run with their packaged software libraries on native *nix [2] bare-metal machines, developer laptops and desktop Windows platforms, as well as all major public cloud VM platforms such as Amazon Web Services (AWS), Google Cloud Platform (GCP) and Microsoft Azure [3].
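To make the idea of packaging a stack into an image a little more concrete, here is a minimal sketch that renders the text of a Dockerfile, the recipe Docker uses to build a container image. It assumes a small Python application; the base image tag, the file names and the `render_dockerfile` helper are illustrative assumptions, not a description of any particular product's build.

```python
import json


def render_dockerfile(base_image, requirements_file, command):
    """Return Dockerfile text that bundles an app with its dependencies.

    All arguments are hypothetical example values supplied by the caller.
    """
    lines = [
        f"FROM {base_image}",                # base operating system and language runtime
        "WORKDIR /app",                      # working directory inside the image
        f"COPY {requirements_file} .",       # copy the dependency list into the image
        f"RUN pip install --no-cache-dir -r {requirements_file}",  # install libraries at build time
        "COPY . .",                          # copy the application code and configuration
        f"CMD {json.dumps(command)}",        # process to start when a container runs
    ]
    return "\n".join(lines) + "\n"


# Example values only: an assumed base image tag and file names.
dockerfile = render_dockerfile("python:3.12-slim", "requirements.txt", ["python", "app.py"])
print(dockerfile)
```

Saved as a file named `Dockerfile` next to the application, text like this could be built into an image with `docker build`, and the resulting image would carry its libraries with it wherever it runs.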

Virtual Machines vs Docker

“What about virtual machine images – don’t they allow software packaging like Docker?”

The short answer is “Yes, they do”, but with complications. Virtual machines (VMs) contain entire operating systems that need to be managed and patched, and they take some time to start and stop. In a public cloud, it can often take several minutes to commission, patch and spin up a new virtual machine from a VM image. Since Docker containers run as regular *nix processes on their host platforms, they are much more lightweight and much quicker to start, stop and delete. Running software packages from different suppliers on a single VM can also impose complex package version requirements, because all the software typically must use the same version of supporting libraries and other binaries. In short, Docker containers are, in computing terms, cheap, easy and portable, whereas virtual machines are expensive.

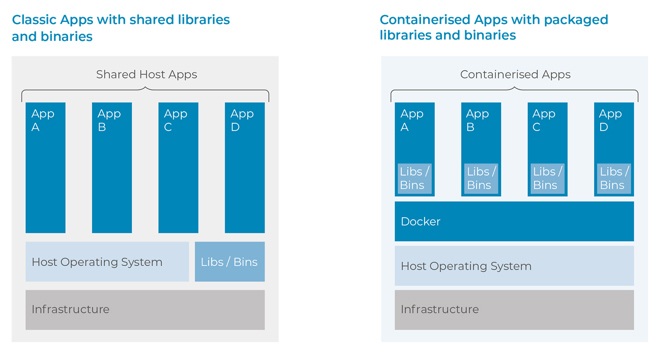

The diagram above illustrates these points. The classic way of running software is to install it on a host operating system (either a VM or a bare-metal machine) that contains the correct versions of shared libraries and other binaries. Only mutually compatible software can be installed within the same host environment. Containerised applications, by contrast, package all the libraries and required supporting binaries within the container image itself, and run isolated from other Docker containers. Containers typically neither know nor care about the software environment of their host operating system. They can run wherever a compatible container runtime is available and are highly portable.
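The difference between the two models can be sketched as a toy example. The application names, library names and versions below are invented for illustration, and the code only models the idea; it does not inspect a real host or a real container.

```python
from dataclasses import dataclass


@dataclass
class App:
    name: str
    requires: dict[str, str]  # library name -> exact version the app needs


def shared_host_conflicts(apps):
    """Return libraries that apps on one shared host need at different versions."""
    wanted = {}
    for app in apps:
        for lib, version in app.requires.items():
            wanted.setdefault(lib, set()).add(version)
    return {lib: sorted(versions) for lib, versions in wanted.items() if len(versions) > 1}


def container_images(apps):
    """Give each app its own bundled copy of its libraries, as a container image would."""
    return {app.name: dict(app.requires) for app in apps}


# Hypothetical applications with incompatible requirements for one library.
apps = [
    App("billing", {"libssl": "1.1", "postgres-client": "12"}),
    App("reporting", {"libssl": "3.0", "postgres-client": "12"}),
]

conflicts = shared_host_conflicts(apps)
images = container_images(apps)

print(conflicts)  # {'libssl': ['1.1', '3.0']}
print(images["billing"]["libssl"], images["reporting"]["libssl"])  # 1.1 3.0
```

On the shared host the two applications cannot both be satisfied, because only one version of the library can be installed. When each application is packaged with its own copy, both versions coexist without interfering with each other.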

Software Containers at Lendscape

Lendscape is using Docker container technology for its Asset Finance product. This, in conjunction with Kubernetes [4] (a container orchestration framework), enables even more robust software development, delivery and run-time capabilities for both public and private cloud infrastructures.

In summary:

• Software containers, like shipping containers, make what they carry portable across different environments. Simplified tooling and processes produce a better, more reliable service.

• Container runtimes solve some of the same problems as VMs but are cheaper to run, because they avoid the overhead of a full operating system in every VM.

• Lendscape is using software containers for its Asset Finance product.

Up Next

• Look out for a follow-up blog post about a container orchestration technology (Kubernetes) and how it can improve the software development and deployment lifecycle.

[1] https://www.docker.com/

[2] Unix-based and Unix-like operating systems: BSD, Linux, macOS, HP-UX, AIX and others

[3] AWS - Amazon Web Services, GCP - Google Cloud Platform or Microsoft Azure

[4] Kubernetes - https://kubernetes.io/

Author: Todd Sproule, Principal Developer, Lendscape